If you are looking for a way to test your JSON Schemas, you might be interested in the new JSON Schema tester from JSONBuddy. This tool lets you test your JSON Schemas against any number of JSON test data files and check the results for consistency, correctness and coverage. In this blog post, we will show you how to use this tool and what benefits it can bring to your JSON Schema development.

What is a JSON Schema tester?

A JSON Schema tester is a tool that helps you verify how a set of data files is validated against your JSON Schema resources. This is especially helpful during the design phase of a JSON Schema. Conversely, a JSON Schema test tool can also help you ensure that your JSON data files match the structure and constraints defined by your JSON Schemas.
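To make the idea concrete, here is a minimal Python sketch of what "testing a folder of data files against a schema" means. It is not how JSONBuddy works internally. The `check` and `run_test` functions, the sample schema and the file names are all made up for illustration, and the checker only understands the `type`, `required` and `properties` keywords, which is a tiny subset of the JSON Schema specification.

```python
import json
import tempfile
from pathlib import Path

# JSON Schema type names mapped to Python types (a small subset of the spec).
TYPES = {"object": dict, "array": list, "string": str,
         "number": (int, float), "integer": int, "boolean": bool}


def check(instance, schema, path="$"):
    """Return a list of error strings; handles only type/required/properties."""
    errors = []
    expected = schema.get("type")
    if isinstance(expected, str) and expected in TYPES:
        ok = isinstance(instance, TYPES[expected])
        # bool is a subclass of int in Python, so reject it for numeric types.
        if expected in ("number", "integer") and isinstance(instance, bool):
            ok = False
        if not ok:
            errors.append(f"{path}: expected {expected}")
            return errors
    if isinstance(instance, dict):
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}: missing required property '{name}'")
        for name, subschema in schema.get("properties", {}).items():
            if name in instance:
                errors.extend(check(instance[name], subschema, f"{path}.{name}"))
    return errors


def run_test(schema, data_folder):
    """Validate every *.json file in data_folder; map file name -> errors."""
    results = {}
    for file in sorted(Path(data_folder).glob("*.json")):
        instance = json.loads(file.read_text(encoding="utf-8"))
        results[file.name] = check(instance, schema)
    return results


# Demo with two throwaway files; the schema and file names are hypothetical.
schema = {
    "type": "object",
    "required": ["id", "name"],
    "properties": {"id": {"type": "integer"}, "name": {"type": "string"}},
}
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "valid.json").write_text('{"id": 1, "name": "a"}', encoding="utf-8")
    Path(folder, "invalid.json").write_text('{"id": "x"}', encoding="utf-8")
    results = run_test(schema, folder)

for name, errors in results.items():
    print(name, "->", errors or "OK")
```

A full validator covers many more keywords and specification drafts, but the shape stays the same: one schema resource, one folder of data files, and one result per file.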

There are many online JSON Schema validators available. However, these online tools have some limitations. For example, they may not support the latest version of the JSON Schema specification, they may not allow you to test multiple JSON data files at once, or they may not provide detailed feedback on how well your JSON data covers your JSON Schema definitions.

That’s why JSONBuddy has developed a new JSON Schema test tool that aims to overcome these limitations and provide a more comprehensive and convenient way to test your JSON Schemas.

How to use the JSON Schema test tool from JSONBuddy?

JSONBuddy is a desktop application that runs on Windows. You can download it for free from https://www.json-buddy.com.

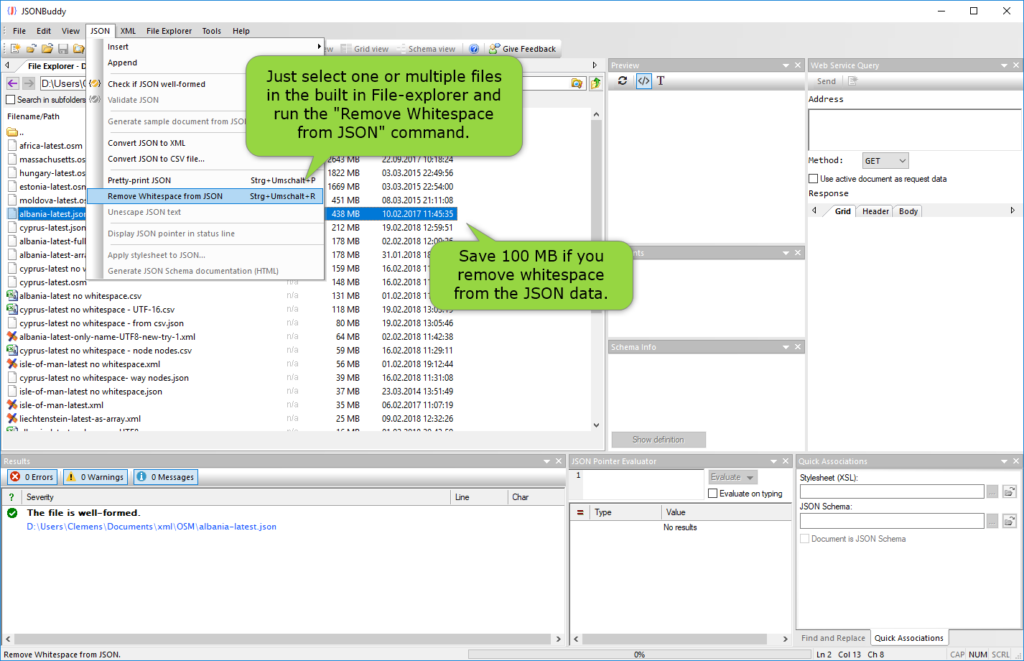

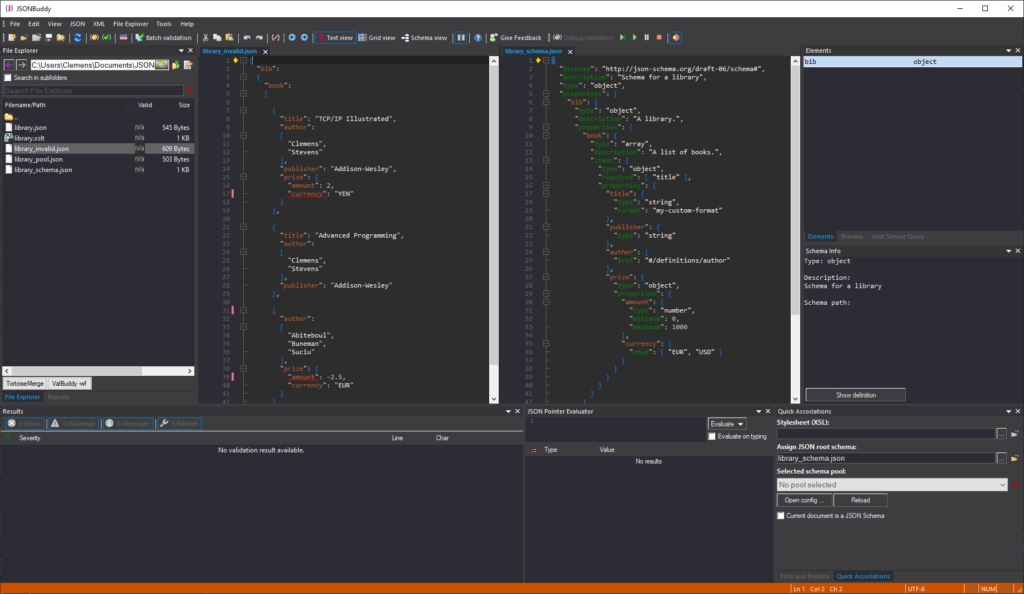

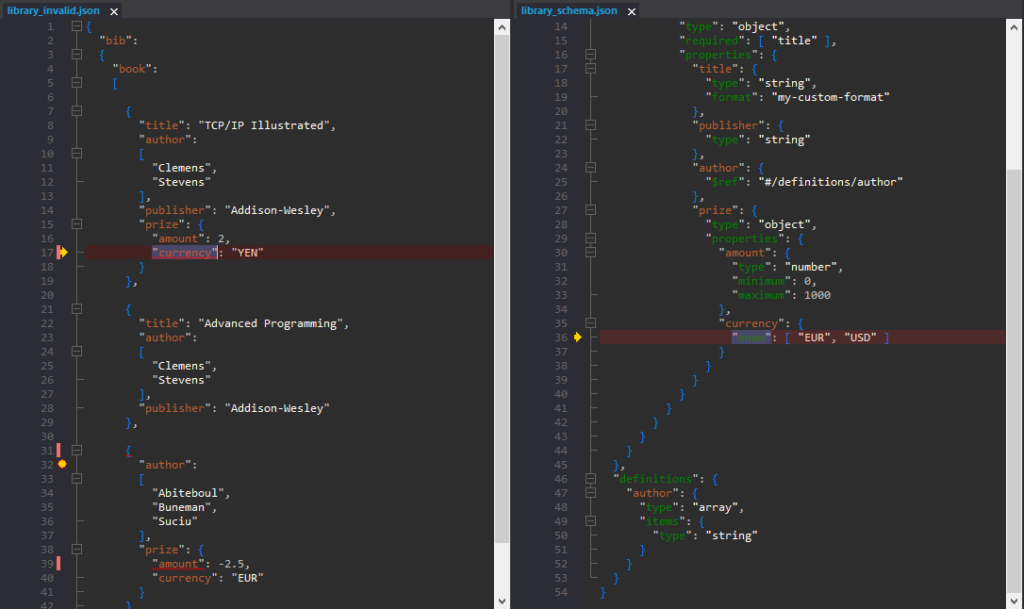

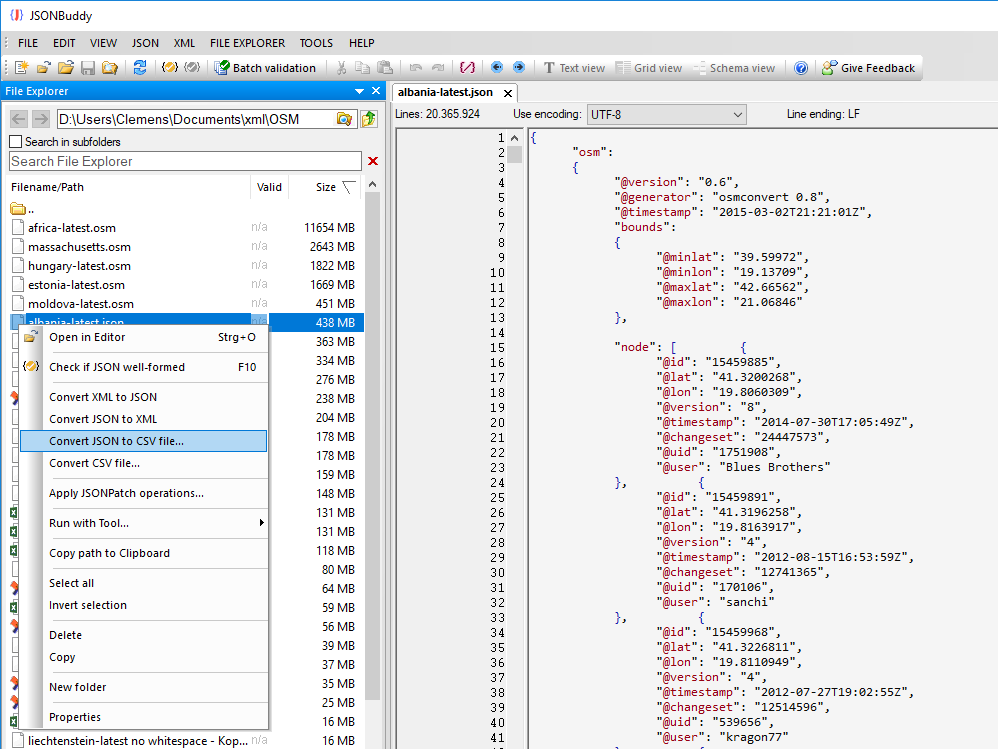

To use the tool, you need either a JSON Schema file or a JSON Schema pool, plus one or more JSON data files that you want to test against it. You can either create them in the built-in editor of JSONBuddy or import them from local or remote sources. The only requirement is that the test data files are accessible in a single local folder.

Once you have your files ready, you can start testing them by following these steps:

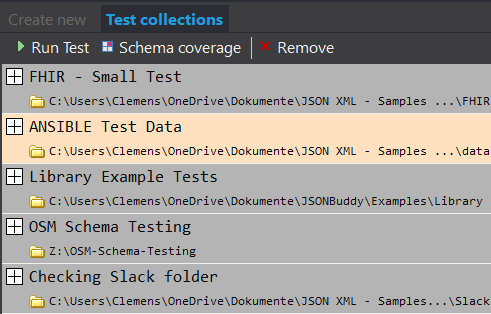

- Activate the Testing pane in the user interface and bring the “Create new” tab to the front.

- Assign a descriptive name to your schema test entry. A good name should be clear, concise and meaningful to help you identify the test later.

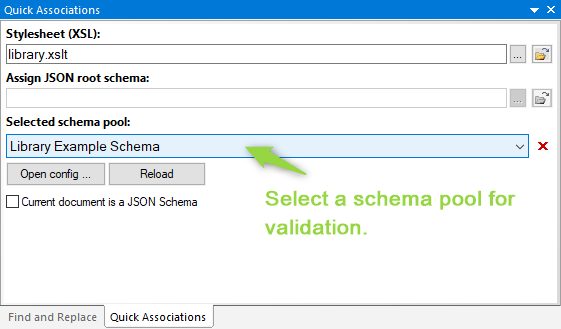

- Set the JSON Schema resource. You can either select a predefined JSON Schema file or pick a schema pool that can contain multiple schema resources.

- As a final step, choose the folder with the test data that you want to quickly and repeatedly validate against the selected JSON Schema resource.

- Click on the Add test button to add your JSON Schema file and your JSON data files.

- Switch to the Test collections tab to see a list of already added test entries.

- Select an entry and click on the Run Test button to begin the validation process. The tool will check each JSON data file against the selected JSON Schema resource.

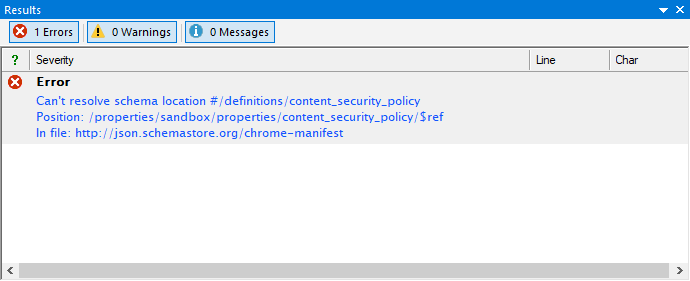

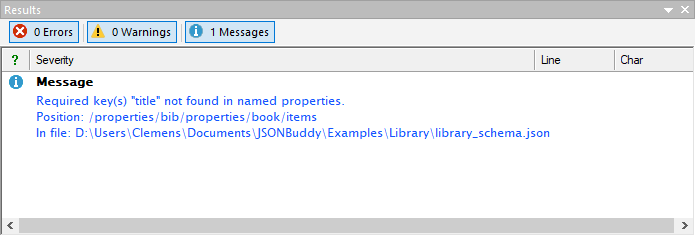

- After the test is completed, you can examine all validation results in detail. A separate log is saved for each test run to preserve the test data. This will show you the validation errors, warnings and processing information for each data file.

- Optionally, you can set a reference result for each JSON Schema test entry by clicking on the Set as reference button. This will allow you to compare ongoing tests with the reference result and see if there are any changes or errors.

- You can also view the schema definition coverage by clicking on the Schema Coverage button. This will show you which parts of the used schemas are checked by the test data, and which parts are not covered.
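The reference comparison from the steps above can be pictured as a simple diff between two test runs. The following Python sketch assumes each run is stored as a mapping from file name to a list of error messages. That storage format, the function name `compare_with_reference` and the sample file names are assumptions for illustration only, not JSONBuddy's actual log format.

```python
def compare_with_reference(reference, current):
    """Compare two test runs, each a dict mapping file name -> list of errors."""
    changes = {}
    for name in sorted(set(reference) | set(current)):
        if name not in reference:
            changes[name] = "new file"
        elif name not in current:
            changes[name] = "file removed"
        elif reference[name] != current[name]:
            changes[name] = "result changed"
    return changes


# Hypothetical results of an earlier run (the reference) and a later run.
reference = {
    "order-1.json": [],
    "order-2.json": ["$.id: expected integer"],
}
current = {
    "order-1.json": ["$: missing required property 'name'"],
    "order-2.json": ["$.id: expected integer"],
    "order-3.json": [],
}

changes = compare_with_reference(reference, current)
for name, change in changes.items():
    print(name, "->", change)
```

Files whose results match the reference are left out of the report, so an empty result means the current run behaves exactly like the reference.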

What are the main features of the JSON Schema test tool from JSONBuddy?

The JSON Schema test tool from JSONBuddy has several features that make it stand out from other online validators. Here are some of them:

- It supports the JSON Schema specification from Draft 4 through Draft 2020-12.

- It allows you to test multiple JSON data files at once, without having to paste or upload them individually.

- It allows you to set a reference result and compare ongoing tests with it. This can help you to check for consistency and correctness of your data over time.

- It collects schema definition coverage information and shows you which parts of your schemas are tested by your data, and which parts are not. This can help you to improve your schema design and completeness.

- It provides a summary report with one click, showing you the overall status of your tests, the number of errors and messages, and the coverage.

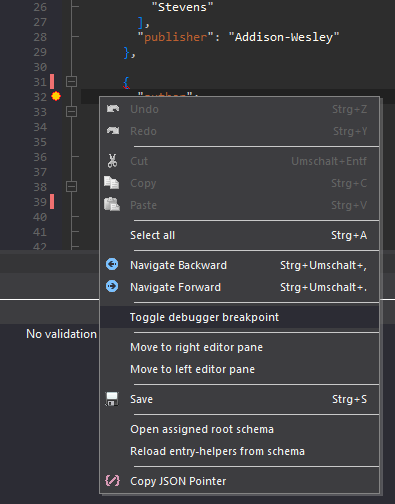

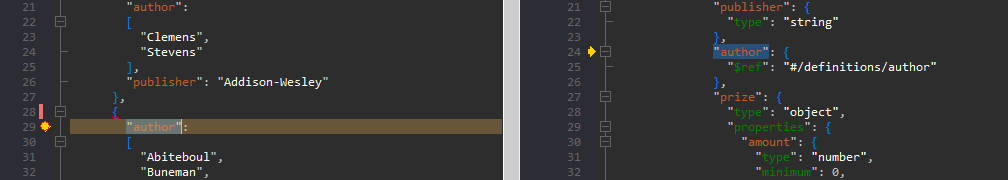

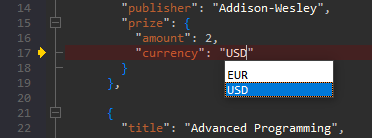

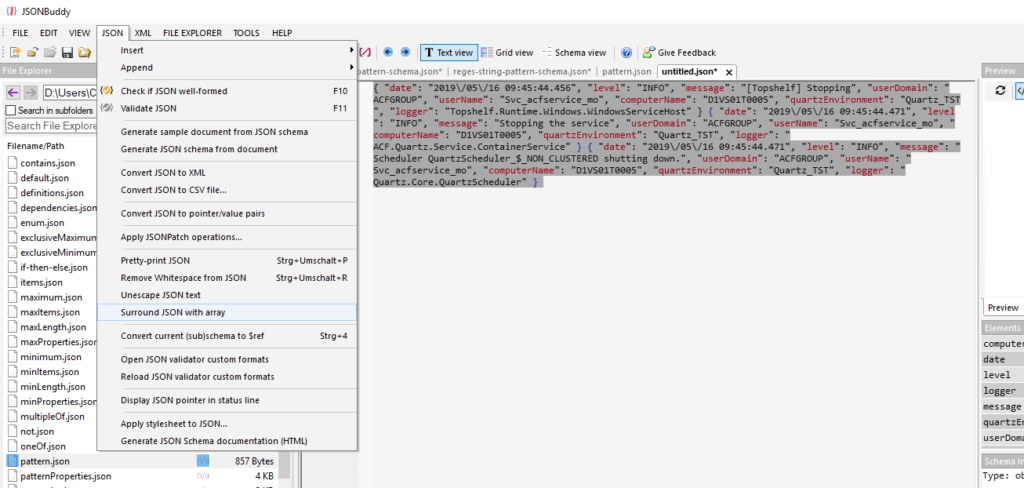

- It integrates with the built-in editor of JSONBuddy, allowing you to edit your schemas and data files easily and see the validation results in real-time.
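To illustrate what schema definition coverage means, here is a small Python sketch. It assumes a very simplified model in which only subschemas under `properties` are counted, and a subschema counts as covered when at least one test instance contains the corresponding property. How JSONBuddy actually computes coverage is not described in this post, so the functions `schema_paths`, `visited_paths` and `coverage` and the sample data are purely hypothetical.

```python
def schema_paths(schema, pointer="#"):
    """List the location of every subschema reachable via 'properties'."""
    paths = [pointer]
    for name, sub in schema.get("properties", {}).items():
        paths.extend(schema_paths(sub, f"{pointer}/properties/{name}"))
    return paths


def visited_paths(instance, schema, pointer="#", seen=None):
    """Collect the subschema locations that an instance actually exercises."""
    seen = set() if seen is None else seen
    seen.add(pointer)
    if isinstance(instance, dict):
        for name, sub in schema.get("properties", {}).items():
            if name in instance:
                visited_paths(instance[name], sub,
                              f"{pointer}/properties/{name}", seen)
    return seen


def coverage(schema, instances):
    """Return (covered count, total count, list of uncovered locations)."""
    all_paths = schema_paths(schema)
    seen = set()
    for instance in instances:
        visited_paths(instance, schema, seen=seen)
    uncovered = [p for p in all_paths if p not in seen]
    return len(all_paths) - len(uncovered), len(all_paths), uncovered


# Hypothetical schema and test data: no instance contains an "address".
schema = {
    "properties": {
        "id": {"type": "integer"},
        "name": {"type": "string"},
        "address": {"properties": {"city": {"type": "string"}}},
    }
}
test_data = [{"id": 1}, {"id": 2, "name": "x"}]

covered, total, uncovered = coverage(schema, test_data)
print(f"{covered} of {total} schema locations covered")
print("not covered:", uncovered)
```

The uncovered locations point at parts of the schema that no test file exercises, which is a hint to either add test data or reconsider that part of the schema.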

Why should you use the JSON Schema test tool from JSONBuddy?

The JSON Schema test tool from JSONBuddy is a powerful and convenient way to test your JSON Schemas and ensure that they are valid, compatible and complete. By using this tool, you can:

- Save time and effort by testing multiple files at once

- Avoid errors and inconsistencies by comparing tests with reference results

- Improve your schema design and quality by collecting coverage information

- Edit your schemas and data files with ease and see the validation results instantly

If you are interested in trying out the JSON Schema test tool from JSONBuddy, you can download it for free from https://www.json-buddy.com. You can also find more information and tutorials on how to use the software on the website.