A customer asked for help converting large JSON data to CSV with JSONBuddy. In general, the JSON editor offers several functions that work without loading the document into the tool (such as pretty-printing and removing whitespace). This allows it to process much larger data than other software usually supports. The customer’s JSON input was about 360 MB with millions of lines. For this article, I’m using a sample document of more than 400 MB.

So this is my recommendation on how to convert a large input file using JSONBuddy:

- Open the JSON document in the editor using the Large File view. This way you can check the structure of the JSON data and see the JSONPointer location to use as the starting point for the conversion. The conversion also supports JSON input that starts with a top-level array.

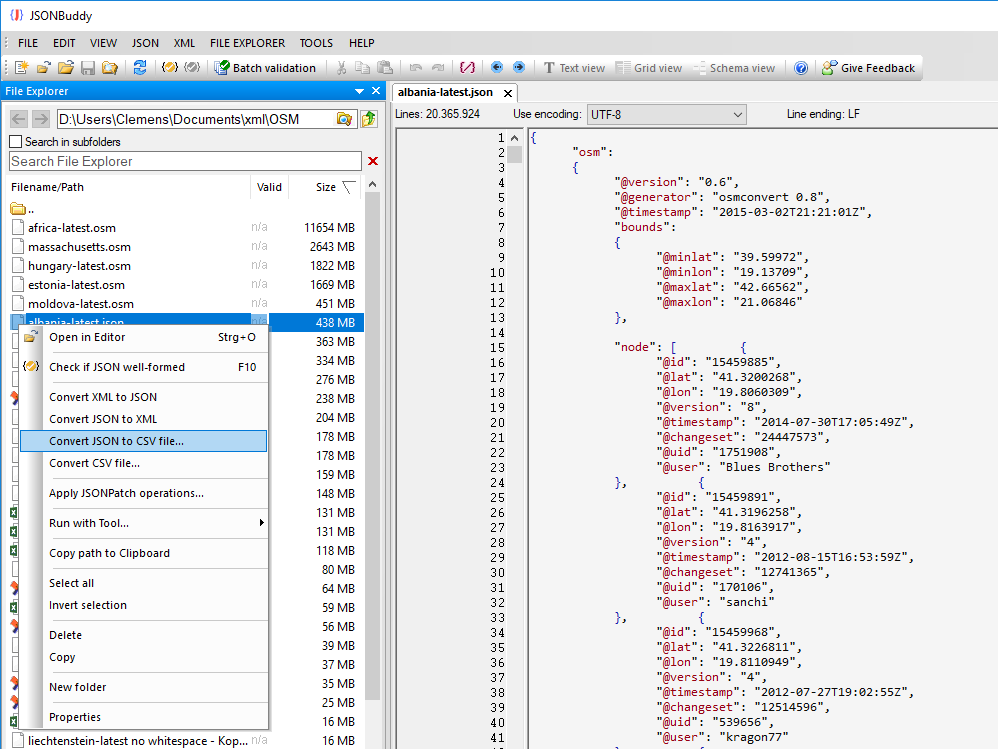

- Use the “JSON to CSV file…” command from the built-in File Explorer window. In this case, the editor doesn’t load the whole document at once. However, it can take a while until the conversion dialog is displayed. On my mid-range PC, the dialog below appears after 80 seconds for the 438 MB sample file:

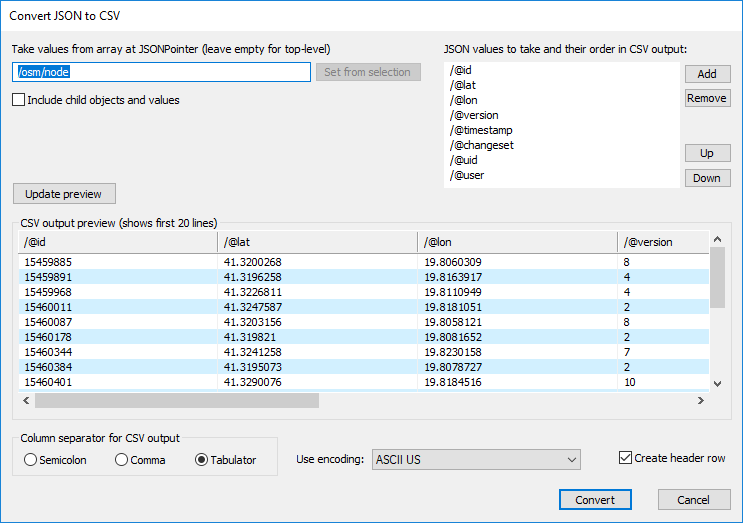

- The conversion dialog is used to set the starting point, the columns, and the output format. Please note that the list of JSON values on the right is only updated after you move the input focus away from the JSONPointer edit field on the left.

- After clicking “Convert”, the output CSV is written as a new document next to the JSON input with .csv as the file extension. This takes about 110 seconds on my system and generates 130 MB of CSV data.

- Afterward, you can load the CSV as plain text into the editor, again using the Large File view.
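
To illustrate what the starting point and column selection mean for the output, here is a minimal Python sketch of the same idea. It is not how JSONBuddy works internally: the function names `resolve_pointer` and `json_to_csv` are made up for this example, and the sketch expects an already parsed document in memory, so it only suits small samples and not files of several hundred megabytes.

```python
import csv
import io
import json


def resolve_pointer(document, pointer):
    """Follow a JSON Pointer (RFC 6901) such as "/data/items" into a parsed document."""
    if pointer == "":
        # The empty pointer refers to the whole document, e.g. a top-level array.
        return document
    if not pointer.startswith("/"):
        raise ValueError("a non-empty JSON Pointer must start with '/'")
    node = document
    for token in pointer[1:].split("/"):
        # Unescape in this order: "~1" becomes "/", then "~0" becomes "~".
        token = token.replace("~1", "/").replace("~0", "~")
        if isinstance(node, list):
            node = node[int(token)]
        else:
            node = node[token]
    return node


def json_to_csv(document, pointer, columns, out, delimiter=","):
    """Write the array of objects found at `pointer` as CSV rows to `out`."""
    rows = resolve_pointer(document, pointer)
    if not isinstance(rows, list):
        raise TypeError("the starting point must be a JSON array")
    writer = csv.writer(out, delimiter=delimiter, lineterminator="\n")
    writer.writerow(columns)  # header row
    for row in rows:
        if isinstance(row, dict):
            # Properties missing from an object become empty cells.
            writer.writerow([row.get(column, "") for column in columns])


# Hypothetical sample data, only for demonstration.
sample = json.loads('{"data": {"items": [{"id": 1, "name": "a"}, {"id": 2}]}}')
buffer = io.StringIO()
json_to_csv(sample, "/data/items", ["id", "name"], buffer)
print(buffer.getvalue())
```

For input that starts with a top-level array, the empty pointer `""` selects the whole document as the starting point.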